Sentiment Analysis of My 10-Year-Old Blog

One busy day, while some jobs were running on the server, I decided to scrape all the entries from an old blog of mine. It contains about 33 entries written between 2012 and 2018. For each entry, I combined the title and the body into a single text.

Text Wrangling and Pre-processing

- Removed HTML tags via the BeautifulSoup library.

- Expanded contractions with the contractions library. Contractions are shortened forms of a word or group of words, so after this step "don't" becomes "do not".

- Removed special characters with regular expressions, using Python's built-in re module.

- Lemmatized the words. A word's stem is what is left after its affixes are stripped, so stemming "listening" gives "listen". Stemming is a fairly crude method: it chops words down by rule, even when the result is not a real word (a Porter stemmer turns "studies" into "studi"). Lemmatization, by contrast, uses vocabulary and morphological analysis to return a word's dictionary form, or lemma, so "studies" becomes "study". For this post, I opted for lemmatization and used the spaCy library.

- Removed stop words. Stop words are very common words, such as "a", "an" and "the", that make up a large share of any text but contribute little to its meaning.
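The pre-processing steps above can be sketched as one small pipeline. This is a minimal, stdlib-only sketch, not the code used for this post: Python's built-in html.parser stands in for BeautifulSoup, and the tiny `CONTRACTIONS` dictionary and `STOP_WORDS` set are hypothetical stand-ins for the contractions library and spaCy's stop-word list. Lemmatization is left out because it needs spaCy (each spaCy token exposes its lemma as `token.lemma_`).

```python
import re
from html.parser import HTMLParser

# Hypothetical, deliberately tiny contraction map; the `contractions`
# library used for this post covers far more cases.
CONTRACTIONS = {
    "don't": "do not",
    "can't": "cannot",
    "won't": "will not",
    "it's": "it is",
    "i'm": "i am",
}

# Hypothetical, deliberately tiny stop-word list; spaCy's list is much longer.
STOP_WORDS = {"a", "an", "the", "and", "or", "is", "are", "to", "of", "in", "it", "i"}

CONTRACTION_RE = re.compile(
    r"\b(" + "|".join(re.escape(c) for c in CONTRACTIONS) + r")\b", re.IGNORECASE
)


class _TextExtractor(HTMLParser):
    """Collects the text between tags and discards the tags themselves."""

    def __init__(self):
        super().__init__()
        self.parts = []

    def handle_data(self, data):
        self.parts.append(data)


def strip_html(html: str) -> str:
    # Stdlib stand-in for BeautifulSoup(html, "html.parser").get_text(" ").
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.parts)


def expand_contractions(text: str) -> str:
    # Replace each known contraction (any casing) with its lowercase expansion.
    return CONTRACTION_RE.sub(lambda m: CONTRACTIONS[m.group(0).lower()], text)


def remove_special_characters(text: str) -> str:
    # Keep ASCII letters, digits and whitespace; turn everything else into a space.
    return re.sub(r"[^A-Za-z0-9\s]", " ", text)


def remove_stop_words(tokens: list[str]) -> list[str]:
    return [t for t in tokens if t not in STOP_WORDS]


def preprocess(html: str) -> list[str]:
    text = strip_html(html)
    text = expand_contractions(text)
    tokens = remove_special_characters(text).lower().split()
    # Lemmatization is skipped here; with spaCy it would map each token
    # to token.lemma_ before stop words are removed.
    return remove_stop_words(tokens)


print(preprocess("<p>I don't like <b>the</b> rain!</p>"))  # ['do', 'not', 'like', 'rain']
```

The order of the calls in `preprocess` follows the order of the steps in the list: tags first, then contractions (which need their apostrophes), then special characters, then stop words.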

Below is a word cloud of the cleaned text (each entry's title and body combined).
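A word cloud draws each word at a size proportional to how often it appears, so the data behind it is just a table of token counts. Here is a minimal sketch using Python's collections.Counter; `word_frequencies` is an illustrative helper name and `cleaned_posts` is hypothetical sample data, not my actual posts.

```python
from collections import Counter


def word_frequencies(token_lists):
    """Count how often each token appears across all cleaned posts."""
    counts = Counter()
    for tokens in token_lists:
        counts.update(tokens)
    return counts


# Hypothetical cleaned posts (already tokenized, lemmatized, stop words removed).
cleaned_posts = [
    ["travel", "food", "friend"],
    ["food", "work", "travel", "food"],
]

freqs = word_frequencies(cleaned_posts)
print(freqs.most_common(2))  # [('food', 3), ('travel', 2)]
```

If you draw the cloud with the wordcloud package, `WordCloud().generate_from_frequencies()` accepts a word-to-count mapping such as this Counter.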

Sentiment Analysis

Since my dataset has no sentiment labels, I couldn't train a model of my own, so I used three ready-made tools: two rule-based libraries and one pre-trained model.

- TextBlob is a popular Python library for processing textual data. It is built on top of NLTK, another popular Natural Language Processing toolkit for Python. For sentiment, TextBlob uses a lexicon of predefined words, each carrying three scores: “polarity”, “subjectivity” and “intensity” (intensity lets modifiers such as "very" strengthen the word that follows). The scores of the words found in a text are averaged to give an overall polarity between -1 and 1 and a subjectivity between 0 and 1.

- VADER (“Valence Aware Dictionary and sEntiment Reasoner”) is another popular rule-based library for sentiment analysis. Like TextBlob, it uses a sentiment lexicon, in which each word's intensity score is based on human-annotated ratings. A key difference, however, is that VADER was designed with a focus on social media text. This means it puts a lot of emphasis on rules that capture the kind of text typically seen on social media: short sentences with emojis, repetitive vocabulary and copious punctuation (such as exclamation marks). For each text, VADER returns the proportions of negative, neutral and positive content plus a normalized "compound" score between -1 and 1. Below are some examples of the sentiment intensity scores output by VADER.

- BERT stands for Bidirectional Encoder Representations from Transformers. The key ideas behind it are:

  - Bidirectional: to understand a word, the model looks both back (at the previous words) and forward (at the next words).

  - Transformers: the "Attention Is All You Need" paper introduced the Transformer model. Unlike LSTMs, which read a sequence one token at a time (left-to-right or right-to-left), the Transformer reads the entire sequence of tokens at once, so in a sense it is non-directional. Its attention mechanism learns contextual relations between words (e.g. that "his" in a sentence refers to "Jim").

  - (Pre-trained) contextualized word embeddings: the ELMo paper introduced a way to encode words based on the context they appear in. For example, "nails" has multiple meanings (fingernails and metal nails), so it should get a different representation in each context.
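The attention mechanism mentioned under Transformers is, at its core, scaled dot-product attention, softmax(Q K^T / sqrt(d_k)) V, from "Attention Is All You Need". Below is a minimal pure-Python sketch of a single attention head. It skips the learned query/key/value projections and the many heads and layers that BERT actually stacks, and the token vectors are made up for illustration.

```python
import math


def softmax(xs):
    # Subtract the max before exponentiating for numerical stability.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V.

    Each argument is a list of equal-length vectors, one per token.
    Returns one output vector per query: a weighted mix of the value vectors.
    """
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # How strongly this query matches every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Blend the value vectors using the attention weights.
        dim = len(values[0])
        outputs.append([sum(w * v[j] for w, v in zip(weights, values)) for j in range(dim)])
    return outputs


# Hypothetical 2-d vectors for a 3-token sequence. In self-attention the same
# vectors serve as queries, keys and values, so every token attends to every
# other token, whether it comes before or after.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for row in attention(tokens, tokens, tokens):
    print([round(x, 3) for x in row])
```

Because nothing masks out later tokens, each output row mixes information from both directions, which is what "bidirectional" means for an encoder like BERT.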

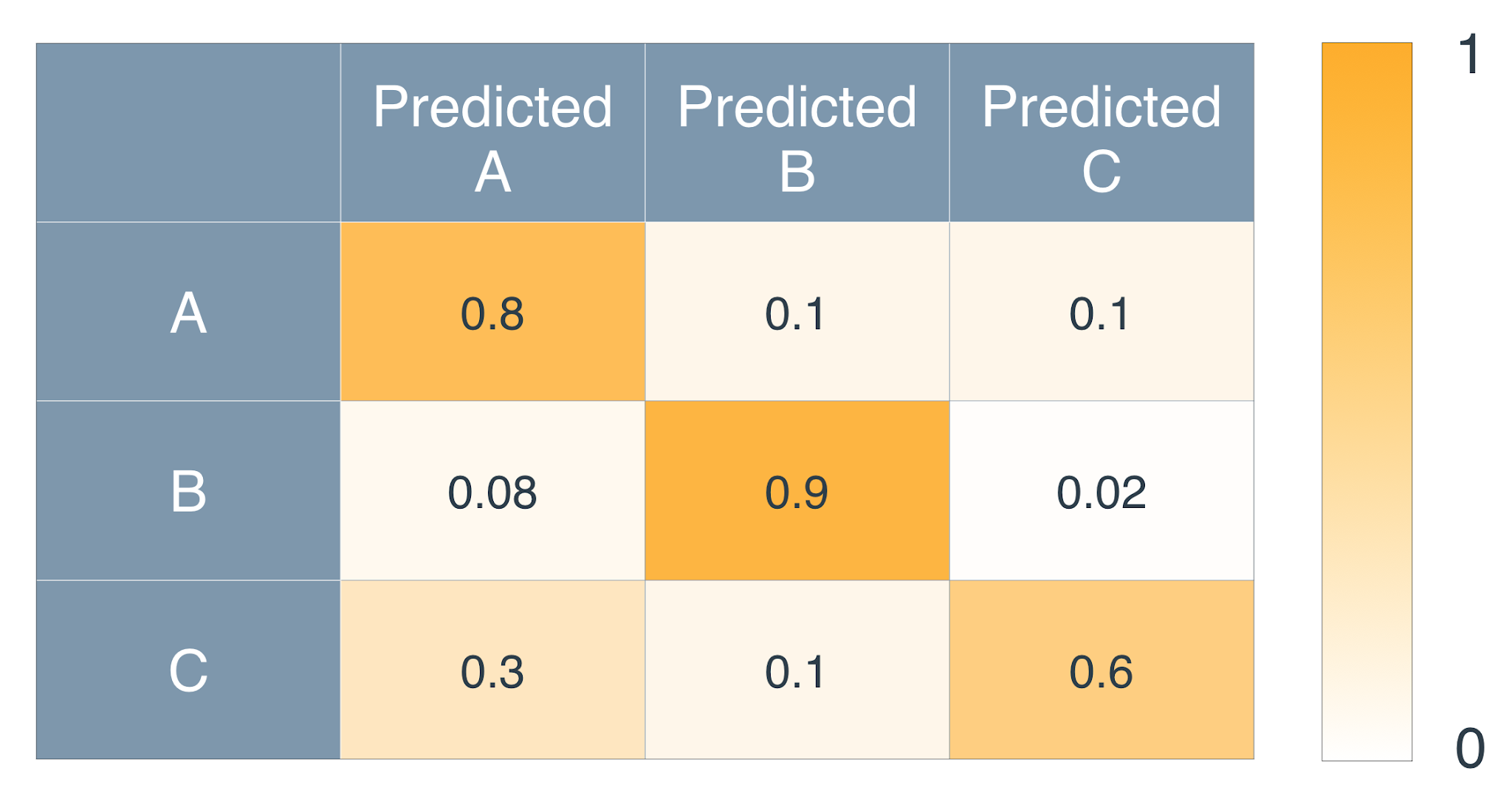
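To make the lexicon-based approach of TextBlob and VADER more concrete, here is a toy scorer. It is a sketch under stated assumptions, not either library's real algorithm: the `LEXICON` and `INTENSIFIERS` tables are hypothetical, the only rule implemented is an intensifier scaling the next word, and the per-word scores are simply averaged. `normalize` mirrors the formula VADER uses to squash a summed valence into a compound score, x / sqrt(x^2 + alpha) with alpha = 15, and `vader_label` applies the ±0.05 thresholds suggested in VADER's README.

```python
import math

# Hypothetical toy lexicon: word -> polarity in [-1, 1]. Real lexicons
# (TextBlob's, VADER's) hold thousands of human-rated entries.
LEXICON = {"good": 0.7, "great": 0.8, "bad": -0.7, "sad": -0.5}

# Hypothetical intensifiers: word -> multiplier applied to the next word.
INTENSIFIERS = {"very": 1.3}


def average_polarity(tokens):
    """TextBlob-style idea: average the (intensified) polarity of known words."""
    scores = []
    multiplier = 1.0
    for tok in tokens:
        if tok in INTENSIFIERS:
            multiplier = INTENSIFIERS[tok]
            continue
        if tok in LEXICON:
            # Apply the pending intensifier, then clamp back into [-1, 1].
            scores.append(max(-1.0, min(1.0, LEXICON[tok] * multiplier)))
        multiplier = 1.0  # an intensifier only affects the very next word
    return sum(scores) / len(scores) if scores else 0.0


def normalize(score, alpha=15):
    """VADER's squashing of a summed valence into (-1, 1): x / sqrt(x^2 + alpha)."""
    return score / math.sqrt(score * score + alpha)


def vader_label(compound):
    """Map a compound score to a label using the thresholds from VADER's README."""
    if compound >= 0.05:
        return "positive"
    if compound <= -0.05:
        return "negative"
    return "neutral"


print(average_polarity(["very", "good", "day"]))  # about 0.91
print(vader_label(normalize(1.9)))                 # positive
```

With the real libraries, `TextBlob(text).sentiment` gives polarity and subjectivity, and VADER's `SentimentIntensityAnalyzer().polarity_scores(text)` returns a dict with "neg", "neu", "pos" and "compound".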

And here are my results!

VADER seems a bit optimistic, while TextBlob is the pessimistic one. BERT falls somewhere between the two.

So I guess most of my posts back then leaned neutral to slightly positive. That's good to know.
